Entry #015

SOUND AND VISUAL DESIGN

Hello world,

at this point in the process, I’m experimenting with sound and visuals together, as it is time to slowly bring all the threads together.

Elettra (the dancer) was so kind as to sketch out some moves based on the previous sound snippets I created.

I’m very happy with the movements, visuals and sound. This is not the final version, of course, but it is a good direction.

This new sound snippet was created as a creative response to her dance movements. The next step is to perform the sound and the movements in real time, with OSC (Open Sound Control) connecting the dancer’s keypoints to some of the sound variables.
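To make the OSC link a bit more concrete, here is a minimal sketch of sending one keypoint-driven value to a sound engine. It assumes the wrist height arrives as a normalised value (0 at the top of the frame, 1 at the bottom) and that the sound side listens for OSC over UDP. The address `/flow/wrist_height`, port 9000 and the 200–5000 Hz cutoff range are hypothetical placeholders, not part of the project. The OSC 1.0 message is encoded by hand with the standard library, so no OSC package is needed.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def scale(value: float, in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> float:
    """Linearly map value from one range to another, clamped to the output range."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def send_wrist_height(sock: socket.socket, wrist_y: float,
                      host: str = "127.0.0.1", port: int = 9000) -> bytes:
    """Map a normalised wrist height to a hypothetical filter cutoff (Hz) and send it."""
    cutoff = scale(1.0 - wrist_y, 0.0, 1.0, 200.0, 5000.0)  # higher hand -> brighter sound
    msg = osc_message("/flow/wrist_height", cutoff)
    sock.sendto(msg, (host, port))
    return msg
```

In use, one UDP socket (`socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`) would be created once and `send_wrist_height` called on every new pose frame. Keeping the mapping in a small `scale` function makes it easy to swap which keypoint drives which sound variable.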

I’m undecided between a black-and-white feel and adding some colour to it; maybe both.

SOUND AND VISUAL SNIPPET

March 18, 2021

Entry #014

SOUND DESIGN

Hello world,

I made some sound samples to explore the direction of the sound in the artwork.

The title of the piece (Flow) made me think about what flow actually means to me in the context of this artwork.

When I say flow, I do not think exclusively about the flow of materials, like water or air, but also the flow of intervals, the flow from one state to another, the flow of moments, sounds, fields, thoughts, mindset, outlook, etc.

Flow implies moving from one place or state to another, and this creates a duality: a from–to state. How could we perceive flow other than as a linear duality?

Even if I think of Transmutation as a flow of intervals, it still appears as a duality.

I like these sound bites, as they evoke a deeper feeling in me, something older than my awareness. It feels like it is coming from the deepest, darkest and wildest part of me.

I will follow this thread in my creative research as a possible element in this artwork.

February 27, 2021

Entry #013

MODULE – ML POSE ESTIMATION.

Hello world,

This is a brief update on the progress of the project.

This project is about creating a space and a tool for human-machine interaction as a means of creating art.

Since the proposal, I have managed to narrow down* the ML elements from the initial four to possibly two (pose estimation and voice command), both already incorporated into the base code.

The final piece is going to be exhibited as documentation of a completed performance, in the form of a multimedia piece.

The next steps involve adding more expressive elements like flocking, building in sound synthesis, deciding on the choreography (if any) and assigning which elements are controlled by the code and which by the artist.

* The other two ML techniques could potentially add to the artwork; however, their contribution is not proportional to the complications they would add. Face detection would make more sense with more than one performer, as it could classify each dancer and apply a different aesthetic to each. In this case, object detection has no unique function, so its role can be delegated to pose estimation.

A short video with pose estimation and style transfer.

January 31, 2021

Entry #012

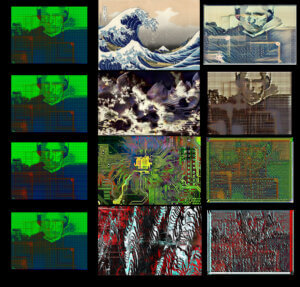

MODULE – ML Style Transfer.

Hello world,

This element of the artwork is about experimenting with style transfer. This “Machine Learning” technique does what it says: it transfers the style of one image onto another.

The ratio of the “style image” to the “content image” in the “result image” depends on the settings we impose on the model before training. It can vary from 1:1 to 1.5:1, 1:2 and so on.
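Assuming the model follows the widely used neural style transfer formulation of Gatys et al., the ratio above corresponds to two weights in the training objective: α for how closely the result $x$ keeps the content image $p$, and β for how closely it matches the style image $a$.

```latex
\mathcal{L}_{\text{total}}(p, a, x) = \alpha\,\mathcal{L}_{\text{content}}(p, x) + \beta\,\mathcal{L}_{\text{style}}(a, x)
```

Raising β relative to α pushes the result towards the style image. In practice the two loss terms live on very different numeric scales, so the weights that work are often far from tidy ratios like 1:2, which is part of why finding them is a matter of trial and error.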

Finding the right ratio is by no means an easy task. It is a trial-and-error process that can take hours or even days.

Finding a mutually complementary “style image” and “content image” is also a matter of time and patience.

When all goes well, the model produces something unique and interesting, and all of a sudden it is worth it.

I have tried many style images for transfer, some worked better than others.

Transmutation – process.

January 10, 2021

Entry #011

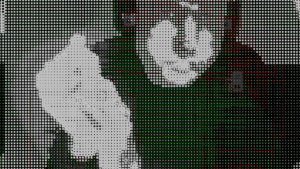

MODULE – ML Speech to Command.

Hello world,

This element of the artwork tests speech commands, a “Machine Learning” technique for sound classification. The sound from the microphone is fed through the ML model, which estimates which word was spoken by comparing the input against the words it was trained on beforehand and picking the best match.
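The “best match” step can be sketched as picking the most probable word from the model’s output scores and ignoring it when the model is not confident. This is a minimal sketch assuming the classifier returns one probability per trained word; the label list and the 0.75 threshold are hypothetical, and the model itself is not shown.

```python
from typing import Optional, Sequence

# Hypothetical command vocabulary; the real labels depend on the trained model.
LABELS = ["yes", "no", "up", "down", "left", "right", "stop", "go"]


def best_match(scores: Sequence[float], labels: Sequence[str] = LABELS,
               threshold: float = 0.75) -> Optional[str]:
    """Return the label with the highest score, or None if it falls below the threshold."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best] if scores[best] >= threshold else None
```

The threshold matters in a performance setting: without it, background noise or music would always be mapped to whichever word it happens to resemble most.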

I was experimenting with Google’s out-of-the-box model, but I will probably train my own if I decide to use this technique in the final artwork. Google’s model is good enough for basic things, but it is biased towards the voices it was trained on, so its accuracy is not as high or as personalised as it could be.

I was exploring the usefulness of giving spoken commands in the context of this project. I imagine I might use them to assign visual elements, activate modes of operation, change variable values or trigger other functionality not foreseen at this point.
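Mapping recognised words to actions could be as simple as a lookup table. This is a minimal sketch assuming a recogniser already returns a single command word; the `SketchState` fields and the word-to-action assignments are hypothetical examples of “modes of operation” and “variable values”.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SketchState:
    """Hypothetical state of the visuals that spoken commands can change."""
    mode: str = "idle"
    particle_count: int = 100
    colour: bool = False


def set_mode(mode: str) -> Callable[[SketchState], None]:
    """Build an action that switches the sketch into the given mode."""
    def action(state: SketchState) -> None:
        state.mode = mode
    return action


def more_particles(state: SketchState) -> None:
    state.particle_count = min(state.particle_count * 2, 10_000)


def fewer_particles(state: SketchState) -> None:
    state.particle_count = max(state.particle_count // 2, 1)


def toggle_colour(state: SketchState) -> None:
    state.colour = not state.colour


COMMANDS: Dict[str, Callable[[SketchState], None]] = {
    "go": set_mode("perform"),
    "stop": set_mode("idle"),
    "up": more_particles,
    "down": fewer_particles,
    "yes": toggle_colour,
}


def handle(word: str, state: SketchState) -> bool:
    """Apply the action bound to word; return False for unknown words."""
    action = COMMANDS.get(word)
    if action is None:
        return False
    action(state)
    return True
```

A table like this keeps the assignments easy to change while the choreography is still undecided.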

These visuals do not reflect the aesthetic direction of the project; they simply illustrate an exercise.

Testing the machine learning model along with the visuals.

December 23, 2020

Entry #010

MODULE – Pose estimation with PoseNet.

Hello world,

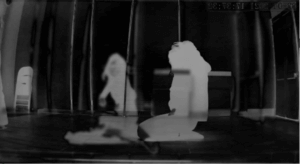

This element of the artwork is all about pose estimation, a “Machine Learning” technique for tracking human movement. The image is fed through the ML model, which makes judgements about the position of the detected subject’s skeleton by estimating the position of 17 “key points” on the body.
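The output of such a model can be pictured as a list of named points, each with a position and a confidence score. This sketch mirrors the shape of PoseNet’s output (part name, position, score) using the 17 part names PoseNet reports; the `Keypoint` class and the 0.5 cut-off are illustrative assumptions rather than PoseNet’s own API.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

# The 17 body parts PoseNet estimates (the COCO keypoint set), in PoseNet's order.
PART_NAMES = [
    "nose", "leftEye", "rightEye", "leftEar", "rightEar",
    "leftShoulder", "rightShoulder", "leftElbow", "rightElbow",
    "leftWrist", "rightWrist", "leftHip", "rightHip",
    "leftKnee", "rightKnee", "leftAnkle", "rightAnkle",
]


@dataclass
class Keypoint:
    """One estimated body point: which part, where it is in the frame, how sure the model is."""
    part: str
    x: float
    y: float
    score: float  # confidence between 0 and 1


def visible_parts(keypoints: Iterable[Keypoint],
                  min_score: float = 0.5) -> Dict[str, Tuple[float, float]]:
    """Keep only the parts the model is reasonably confident about, keyed by part name."""
    return {kp.part: (kp.x, kp.y) for kp in keypoints if kp.score >= min_score}
```

Filtering by score before drawing avoids visuals jumping to spurious positions when a limb is out of frame or occluded; a visual element can then be attached to, say, `visible_parts(points).get("leftWrist")` on each frame.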

I was exploring the meaning and utility of those key points in the context of this project. I imagine I will assign some visual elements to those points as a means of aesthetic expression, but their use will not be limited to that.

In addition to using the positions of the “key points”, I used regression to teach the model to react to certain poses with certain actions (e.g. changing the background colour when a particular skeleton pose is detected). This can be very handy, as I can choose to influence some elements of the artwork with movement, as a means of giving commands.
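As an illustration of the regression idea, here is a small sketch in plain Python rather than the ML library the project uses. It flattens a pose into a feature vector normalised to the pose’s bounding box, then fits a linear regression by stochastic gradient descent so that each example pose maps to a target value, such as a background brightness between 0 and 1. The feature scheme, learning rate and example poses are all assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Model = Tuple[List[float], float]


def pose_features(points: Sequence[Point]) -> List[float]:
    """Flatten (x, y) keypoints into one vector, normalised to the pose's bounding box
    so that where the dancer stands and how large they appear matter less."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0  # avoid dividing by zero
    features: List[float] = []
    for x, y in points:
        features.extend(((x - min_x) / span, (y - min_y) / span))
    return features


def predict(model: Model, x: Sequence[float]) -> float:
    """Linear model: weighted sum of the features plus a bias."""
    weights, bias = model
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def train_linear(samples: Sequence[Sequence[float]], targets: Sequence[float],
                 lr: float = 0.1, epochs: int = 1000) -> Model:
    """Fit the linear model by stochastic gradient descent on squared error."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, targets):
            error = predict((weights, bias), x) - target
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias
```

For example, with shoulders and wrists as four points, an “arms up” pose could be trained towards 1.0 and an “arms down” pose towards 0.0; clamping the prediction to 0..1 then gives a background brightness that changes smoothly as the arms move between the two.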

While testing PoseNet’s functionality, I took the opportunity to experiment with visuals. I’m not sure which aesthetic direction I will choose, as it is far too early for that to solidify.

Testing the machine learning model along with the visuals.

December 11, 2020

Entry #009

PROJECT PROPOSAL

Hello world,

After all that brainstorming, ideation and prototyping, I assembled a proposal for this project.

Knowing myself, this does not mean the project cannot evolve into something more or less than this proposal; it is more of a framework for my creative process.

A proposal of this type is a tool for me to develop and manifest this piece, and to focus my imagination and resources.

Setting an approximate scope and timeline for the process helps me keep track of where I am and what needs to be done at a given time.

What follows is the development of individual modules and testing them out.

Creative Project Proposal gpasz001

December 2, 2020

Entry #008

MORE EXPERIMENTATION

Hello world,

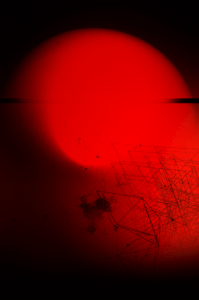

Here is some more experimentation before I put together a proposal.

I’m still playing with visual and conceptual elements at this stage, so that later on I can draw on rich material when I start bringing things together.

Here is the original photograph I took for this sketch. I named it “meditation #044”.

Let me know what you think.

November 29, 2020

Entry #007

EXPERIMENTATION

Hello world,

This part of the process is about airing out ideas and concepts to get a better understanding of the project’s direction.

I took the smoke element from the previous creative session and added a digital shadow to it.

This “digital shadow” or “digital gaze” will be an overlay on the output media.

It is made of a few machine learning algorithms, such as face recognition/detection and PoseNet (pose and movement recognition), plus a pixel manipulation algorithm.
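As one possible example of a pixel manipulation step (the entry does not say which algorithm is used), here is a block-averaging mosaic that reduces a greyscale frame to coarse cells, a simple way to make an image read as machine-seen. The frame is a plain list of rows of 0–255 values, a hypothetical stand-in for a camera frame.

```python
from typing import List

Frame = List[List[int]]


def mosaic(frame: Frame, block: int) -> Frame:
    """Replace each block x block tile with its average brightness.
    Tiles at the right and bottom edges may be smaller than block."""
    if block < 1:
        raise ValueError("block must be at least 1")
    height = len(frame)
    width = len(frame[0]) if height else 0
    out = [row[:] for row in frame]  # copy so the input frame is untouched
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            total = sum(frame[r][c] for r in rows for c in cols)
            average = round(total / (len(rows) * len(cols)))
            for r in rows:
                for c in cols:
                    out[r][c] = average
    return out
```

Larger `block` values throw away more detail, so the block size could itself become a performance parameter driven by the dancer.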

Conceptually, I am very interested in the idea of real experience and its representation in the form of visual data.

For example, I think I will use a dancer to explore movement; however, instead of displaying the dancer’s image or video directly, I intend to reveal a machine reading of it, such as the movement itself, the data of the person, or a combination of these at intervals.

The images here highlight the contrast between the aesthetics of the real smoke and the machine’s gaze.

With this element, I’m exploring the phenomenon of classifying experience into data of any kind and then mistaking that data for the experience itself.

Can we boil things down to data, or represent them in full with it? Even with an infinite amount of data?

There is an idea in sound synthesis that, with enough sine waves, you could theoretically recreate any sound or piece of music. However, does all that data make you enjoy the music it is trying to reproduce or represent?
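The sine-wave idea is additive synthesis, grounded in Fourier series. A minimal sketch: a square wave built from its odd sine harmonics, each at amplitude 1/n. The frequency, sample rate and harmonic counts are arbitrary choices for illustration.

```python
import math
from typing import List


def square_wave_partial(t: float, freq: float, n_harmonics: int) -> float:
    """Approximate a square wave at time t (seconds) by summing its first
    n_harmonics odd sine harmonics (its Fourier series)."""
    total = 0.0
    for k in range(1, n_harmonics + 1):
        n = 2 * k - 1  # a square wave contains only odd harmonics
        total += math.sin(2 * math.pi * n * freq * t) / n
    return 4 / math.pi * total


def render(freq: float, n_harmonics: int, sample_rate: int = 44100,
           seconds: float = 0.01) -> List[float]:
    """Render a short buffer of samples in the range of roughly -1..1."""
    count = int(sample_rate * seconds)
    return [square_wave_partial(i / sample_rate, freq, n_harmonics) for i in range(count)]
```

Adding harmonics brings the sum closer to the ideal square wave, yet right next to each jump the sum keeps overshooting by about 9% of the jump no matter how many harmonics are used (the Gibbs phenomenon).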

Can data recreate any experience in full?

What is missing in translation?

While contemplating this subject, I recognised another side to this idea: rather than trying to replace, we can enhance. I will create an interface with the machine that moves along with the human subject, for the sake of the performance.

So instead of either-or, I will aim for the best of both.

Flow

https://www.youtube.com/watch?v=57iUT9Vb6g0

November 24, 2020

Entry #006

PROTOTYPE – A

Hello world,

with this post, I’m heading towards a clearer concept and direction for this project.

The PDF below represents the rough idea of the project ahead. I deliberately kept the description minimal, as it will probably change during the process.

I sketched out some visuals that represent ideas about the visual style. I aim to make it minimalistic; however, that is always a challenge for me, so we will see in the end.

I will use multiple programs running in real time and communicating with each other, along with some pre-recorded media feeding into them.

The whole process will then feed into Resolume Arena via ManyCam and OBS Studio. From there I will project it onto a surface, most probably a semi-sculptural background.

I’m thinking of using the visual and conceptual elements mentioned above to track a dancer with computer vision and face detection (recognition) to create a short performance art piece.

I’m still undecided whether I will conduct this live or assemble it from prerecorded elements. This decision will be made once the project has evolved further, as I can’t foresee the complexity and complications just yet.

Prototype A workflow map

November 7, 2020